In Word, a non-breaking space can be inserted by pressing Shift+Ctrl+Space. Unfortunately, this shortcut is not available in many other programs, including online content-management systems. Running this script makes the shortcut available in those programs as well.



Anglo Premier now translates LaTeX documents

In addition to the dozens of formats Anglo Premier already works with, we are now able to translate LaTeX files. We use a special filter that protects the code used in the LaTeX document, which means we can guarantee that we won’t spoil any of the code so that you will be able to compile the final document in the target language. Contact us if you need a LaTeX document translating.

Translating LaTeX files in MemoQ

LaTeX is a format that uses tags so that authors do not have to worry about typesetting their text. Unfortunately, at the time of writing there do not seem to be any CAT tools that accept this format. However, MemoQ lets you label tags using regular expressions. We have created a filter for importing LaTeX files into MemoQ. If you normally use a different CAT tool, you could import your file into MemoQ (you can use the demo version for up to 45 days) and then export it into a format you can use in other CAT tools (for example, the XLIFF format).

To use the filter, download it here. Next, go to the Resource console in MemoQ and click on “Filter configurations”. Click “Import new” and add the file you just downloaded.

Now all you need to do is go to “Add document as” in a MemoQ project, click on the “Open” button in the Document import settings window and select “Latex all” from the list.
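To give an idea of how regular expressions can mark LaTeX code as tags, here is a minimal Python sketch. It is not the filter offered above: the pattern `LATEX_TAG` and the function `split_tags` are my own illustrative names, and the pattern only covers simple commands, escaped characters and braces.

```python
import re

# Hypothetical pattern, not the one used in the MemoQ filter.
# It treats commands such as \section or \item[a], escaped characters
# such as \%, and bare braces as protected tags.
LATEX_TAG = re.compile(r"\\[A-Za-z]+\*?(?:\[[^\]]*\])?|\\.|[{}]")


def split_tags(line):
    """Return (kind, text) pairs, where kind is 'tag' or 'text'."""
    parts = []
    pos = 0
    for match in LATEX_TAG.finditer(line):
        if match.start() > pos:
            # Anything between two tags is translatable text.
            parts.append(("text", line[pos:match.start()]))
        parts.append(("tag", match.group()))
        pos = match.end()
    if pos < len(line):
        parts.append(("text", line[pos:]))
    return parts


if __name__ == "__main__":
    for kind, text in split_tags(r"\section{Results} See \textbf{this}."):
        print(kind, repr(text))
```

Joining all the pieces back together gives the original line, which is the property a translation filter needs: the translator only edits the "text" pieces and the code survives untouched.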

Conversion of Déjà Vu memories into MemoQ memories

If you export a Déjà Vu (DVX) memory or terminology database and import it into MemoQ, you lose some of the data such as the client, subject, project, user name and creation date. This is because the tmx format created by DVX does not match the tmx format created and understood by MemoQ. For example, Déjà Vu has separate creation dates and user IDs for the source and target, whereas MemoQ has a single creation date for a translation pair (which makes more sense). Also, the tmx created by DVX contains the subject and client codes, not the actual names. For example, if you used the subject “33 – Economics” in DVX, you will be importing the number “33” as the subject, not the word “Economics”. Similarly, if you used client codes, like “MST” for “Microsoft”, you’ll be importing the code rather than the full name.
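The code-versus-name problem can be illustrated with a short Python sketch. This is only an assumption-laden example, not our conversion procedure: the `<prop>` type names `x-subject` and `x-client` and the lookup tables are hypothetical, so check an exported tmx file for the names your version of DVX actually writes.

```python
import xml.etree.ElementTree as ET

# Hypothetical lookup tables; the real codes and names come from your DVX setup.
SUBJECTS = {"33": "Economics"}
CLIENTS = {"MST": "Microsoft"}

# Hypothetical <prop> type names; inspect your exported tmx for the real ones.
LOOKUPS = {"x-subject": SUBJECTS, "x-client": CLIENTS}


def expand_codes(tmx_text):
    """Replace subject and client codes in <prop> elements with full names."""
    root = ET.fromstring(tmx_text)
    for prop in root.iter("prop"):
        table = LOOKUPS.get(prop.get("type"))
        if table and prop.text in table:
            prop.text = table[prop.text]
    return ET.tostring(root, encoding="unicode")


# A cut-down sample (a real tmx file also has a <header> element).
sample = (
    '<tmx version="1.4"><body><tu>'
    '<prop type="x-subject">33</prop><prop type="x-client">MST</prop>'
    '<tuv xml:lang="es"><seg>Hola</seg></tuv>'
    '<tuv xml:lang="en"><seg>Hello</seg></tuv>'
    '</tu></body></tmx>'
)

if __name__ == "__main__":
    print(expand_codes(sample))
```

The sketch only handles the subject and client codes. Merging the separate source and target creation dates into a single date per translation pair would need further work.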

Anglo Premier recently migrated from Déjà Vu to MemoQ. After much labour, we successfully converted our translation memories and terminology databases, preserving all the subject and client data and the dates. We initially described the process on this blog, but the procedure is complicated to follow and the script we created won’t run properly on all versions of Windows. It also requires the user to have Excel and Access 2003. Instead, we are offering to convert your translation memories and terminology databases for you. For a fee of €20 or £16.50 we will convert a translation memory or terminology database, and for €40 or £33 we will convert up to four databases. None of the content of your databases will be read and we will delete the databases from our system as soon as the conversion has been done and the file(s) have been sent to you.

If you wish to use this service, please contact us via the contact form on our main website.

Machine translation and context

A sentence I’ve just translated is an excellent example of the advantages and disadvantages of machine translation. The original sentence said this:

Los rankings se basan en indicadores sociales y económicos.

Google Translate offered this:

The rankings are based on social and economic indicators.

This is a good example of how machine translation can speed up the translation process. The translation is almost perfect. I say almost, because the translation doesn’t quite work in my context. The reader is left asking “Which rankings?”.

The original sentence is actually talking about rankings in general, rather than any specific rankings. Unfortunately Spanish does not make this distinction in the use of articles, so the word “los” is needed whether talking about rankings in general or specific rankings referred to earlier in the text. Google Translate works sentence by sentence, so it has no way of knowing whether the word “the” should appear at the beginning of the English translation.

Another similar problem comes up when I translate biographical texts. Imagine a sentence in Spanish that says the following:

Nació en Tolosa en 1960, pero desde 1970 vive en Roma.

Is Tolosa referring to the city of Toulouse in the Languedoc region (Tolosa is the traditional Spanish spelling of the city) or the small town in the Basque Country? OK, so I’ve deliberately come up with an ambiguous place name, but the other problem in this example does occur more often: is the text talking about a male or a female? Google Translate has no way of knowing, since it only looks at the context of the sentence. It normally chooses a sex seemingly at random. In this particular example it has produced a translation that does not specify the sex of the person:

Born in Toulouse in 1960, but since 1970 living in Rome.

Although it has avoided assigning a sex to the person the text is talking about, the translation is unacceptable and would need considerable editing. By changing the sentence slightly I can force Google Translate to assign a sex:

Nació en Tolosa en 1960, pero desde 1970 vive con sus padres en Roma.

Born in Toulouse in 1960, but since 1970 living with his parents in Rome.

The pronoun “his” is used, but the person we’re talking about could just as well be female. As additional evidence that Google Translate doesn’t use context I will now ask it to translate the following two sentences together:

Julia es una ilustradora francesa. Nació en Tolosa en 1960, pero desde 1970 vive con sus padres en Roma.

Google Translate provides the following translation:

Julia is a French illustrator. Born in Toulouse in 1960, but since 1970 living with his parents in Rome.

Google Translate still uses the word his, yet to any human translator it is blatantly obvious, thanks to the context of the first sentence, that the correct pronoun is her.

Running Dragon 11 on 64-bit Windows XP

Nuance say Dragon is not compatible with 64-bit Windows XP. The installer aborts if you try to install it on this system. But there’s a workaround! Simply follow the instructions found here, but omit the part telling you to change the properties of Audio.exe to run in XP compatibility mode, since you’re already running XP (the blog was written to explain how to run Dragon in 64-bit Vista).

Many thanks to Larry Henry for explaining this workaround.

Finding acronyms in Microsoft Word

To find acronyms written in all caps in Microsoft Word, use the following search line (including the angle brackets) with wildcards activated:

<[A-Z]{2,}>

To find only plural acronyms, change it to this:

<[A-Z]{2,}s>

And to find only acronyms with an apostrophe followed by an s, change it to this:

<[A-Z]{2,}[’'´]s>
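If the text is not in Word, roughly the same searches can be run with Python's `re` module. This is a sketch of equivalents rather than a feature of Word: `\b` stands in for Word's angle brackets, and the sample sentence is invented for the example.

```python
import re

# Python equivalents of the three Word wildcard searches.
# \b plays the role of Word's < and > word-boundary markers.
ACRONYM = re.compile(r"\b[A-Z]{2,}\b")
PLURAL = re.compile(r"\b[A-Z]{2,}s\b")
POSSESSIVE = re.compile(r"\b[A-Z]{2,}[’'´]s\b")

text = "The UN and NATO met; the NGOs disagreed with the EU's plan."

if __name__ == "__main__":
    print(ACRONYM.findall(text))
    print(PLURAL.findall(text))
    print(POSSESSIVE.findall(text))
```

In Python the first pattern also picks up the "EU" in "EU's", because an apostrophe counts as a word boundary. Like the Word version, `[A-Z]` only covers unaccented capitals.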

Did the Queen have a sex change? Automatic translation of speech

There have been many articles recently, like this one, about advances in the automated translation of speech, and I’ve even read stories about armies using this technology. I find the latter news very worrying.

Automated translation of speech basically combines two previously existing technologies: speech recognition and machine translation. The problems with the latter are well publicised, and despite the advances made, the problems remain. Google’s corpus-based translations mean that sentences tend to be more coherent nowadays, but a coherent sentence can also be an incorrect translation.

Voice recognition has come on leaps and bounds recently. I use it myself when translating. But as every user of such technology knows, you have to train it to your voice, and even then it makes mistakes that you have to correct. The article from The Times I’ve provided a link to discusses the problem of understanding “high-speed Glaswegian slang”. Current technology would no doubt be absolutely useless at understanding this. But what about more standard forms of English?

I decided to test how Google’s new speech-recognition tool would cope with the Queen’s English — literally the Queen’s English — a speech made by Queen Elizabeth II to parliament in 2009. As I expected, because the tool is not trained to the individual’s voice, the results are pretty awful. To see the video, click on this link. Pause the video, move your mouse over the “CC” button at the bottom of the video, then click on “Transcribe Audio” (don’t click on “English”, as that just gives you captions provided by a human, rather than the automated transcription), click on OK, and the video begins. The Queen tells us how she “was a man that’s in the house of common” [sic].

We can, if we wish, have these captions translated into another language. Just go to the “CC” box and click on “Translate Captions”, then choose your language. But the machine translation will only translate what it’s asked to translate, so we are still likely to get told that the Queen is a man. The translations into the three other languages I work with begin like this:

Catalan: “Jo era un home que està a la Cambra dels Comuns”

Spanish: “Yo era un hombre que está en la Cámara de los Comunes”

French: “J’étais un homme qui est dans la Chambre des communes”

As you can see, there is a very high risk of misunderstanding when using this technology. If the army wants to communicate with people in other languages, I’m afraid they’re just going to have to hire trained interpreters.

DownThemAll! for corpus-building

For professional translations, see my business website at www.timtranslates.com.

DownThemAll! can be a useful tool for creating a large, relatively clean corpus in a short amount of time. In this article, I shall explain one way of using DownThemAll! via a Google search to create a corpus. This particular example involves downloading the texts from the BBC Food website to create a corpus of recipes, which would be useful for translating and editing texts on food. However, the important thing is the method, rather than the result, so even if you do not think you will use a corpus on food, you may still find it useful to follow through the instructions, since you can then use the same method to download texts from other websites.

The method described in this article requires the use of the Firefox browser. The method was developed using the Windows XP operating system, but should work on other operating systems.

Firefox is needed because we will download the texts using the Firefox extension DownThemAll!. Once you have opened Firefox, if you do not already have the DownThemAll! extension, download it from here. When prompted, restart your browser (the browser should open up again with the same pages open).

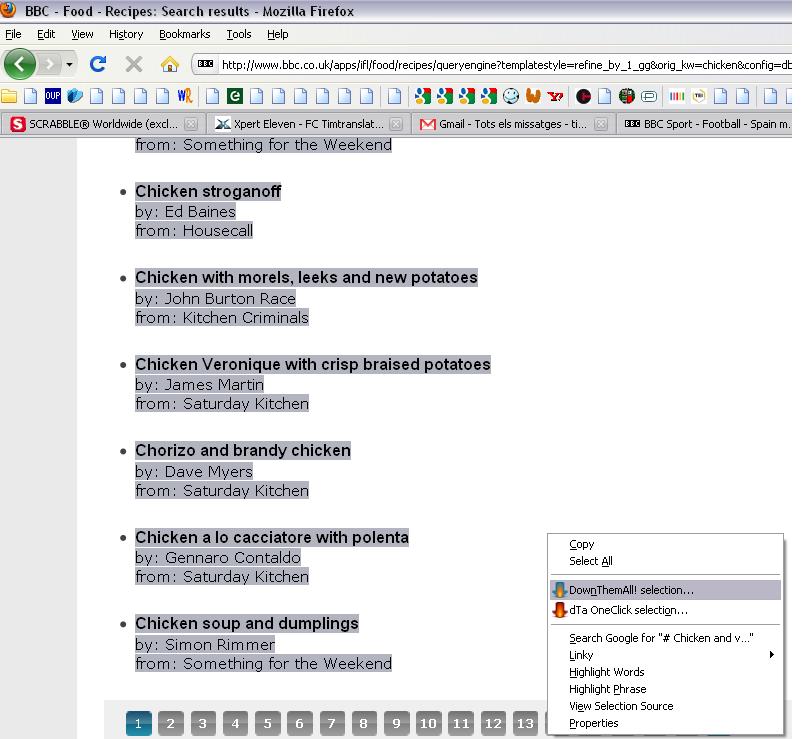

DownThemAll! allows us to download all the links we have selected on a page. If we go to the BBC Recipes page and enter “chicken” into the search box, we are taken to this page. From here, we could download all 15 recipes by selecting the recipes, then right-clicking and selecting “DownThemAll selection…”, as shown below.

On the next screen you could then click on “All files”, select the folder to save the files to and click on “Start”. The problem with this method, however, is that we can only do 15 recipes at a time.

Downloading from Google

Google can display up to 100 results simultaneously (if anyone finds a search engine that makes it possible to display more results, please leave a comment), and we can target our search on the folder of the BBC website containing all the recipes, as follows:

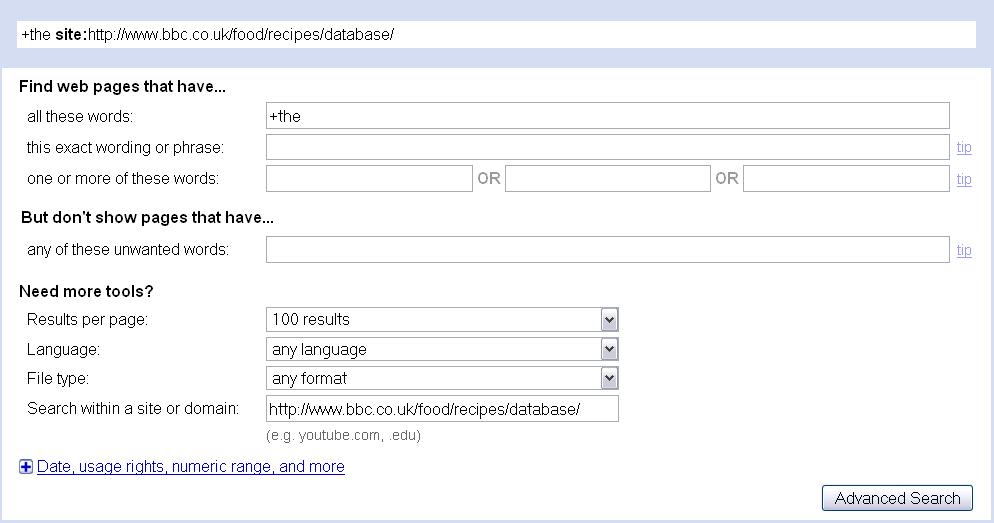

- Open a new tab (press ctrl+t), open up Google, and go to “Advanced search”.

- Type +the as your search term (the plus sign tells Google to search for the word exactly as it is written, and not to ignore it as a frequent word). This should ensure we get a good range of types of recipe. If you wanted only fish or chicken recipes, then you could search for “fish” or “chicken” instead.

- Change the number of results per page to 100.

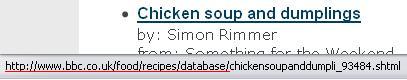

- We need to tell Google to search only within the folder containing the recipes. If you go back to the previous tab, where we searched for “chicken” in the BBC database, and move your mouse over one of the links to a recipe, you will see this folder, as shown below:

- The part I have underlined in red in the above image appears in the URLs of all the recipes. This is what we will type into Google in the “Search within a site or domain” field. Our Google search should thus appear as follows:

- Execute the search.

Downloading the pages

- On the results page, do not select anything, do a right-click, and click on “DownThemAll!…”.

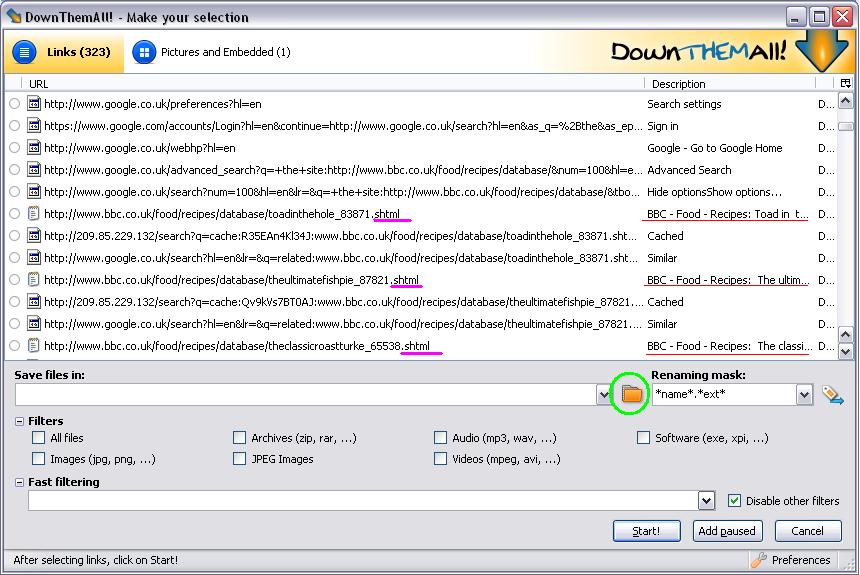

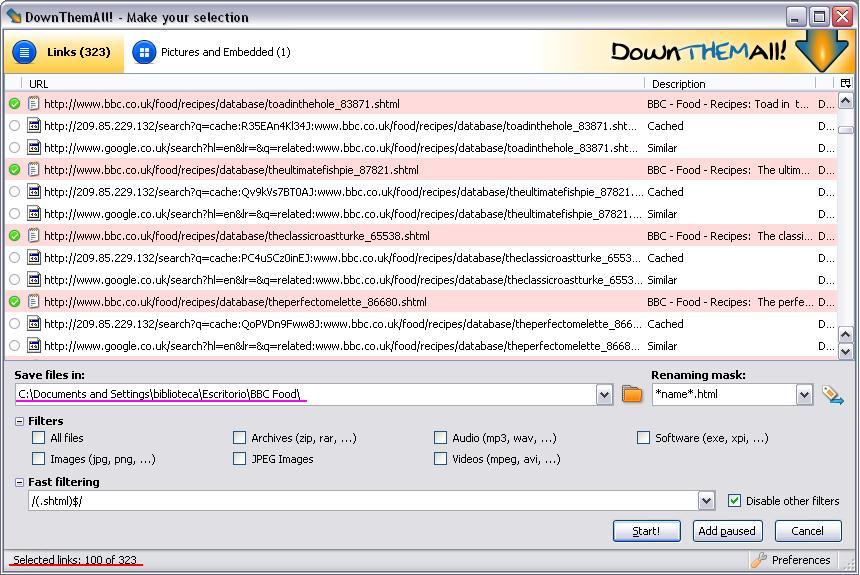

- In the DownThemAll! window, scroll down until you can see some of the links to the actual recipes, i.e. those links with descriptions resembling those underlined in red below:

We need to find a way of downloading only the recipes, and not the Google Images, Videos, Maps, etc. links, nor the “Cached” and “Similar” links, nor any other links other than the recipes. To do this we shall use the “Fast filtering” option.

- Disable all the filters (“All files”, “Images”, etc.).

- Click on the plus sign next to “Fast filtering”

- In the “Fast filtering” box, click on the drop-down list and select the /(.mp3)$/ option. You can find more on the syntax used in the Help files, but basically this option selects only mp3 files. In this example we want to download only “shtml” files, since our recipes have this file extension (see the pink underlines above). Select the letters “mp3” and change them to “shtml”. The filter should now read /(.shtml)$/

- We are going to use the renaming mask. The default mask (*name*.*ext*) means that pages will be saved with their current name and extension, so we would have files such as “theclassicroastturke_65538.shtml”. We are going to change the extension to “html”, since this will make it easier to clean our files once we’ve downloaded them. To do this, change the mask to *name*.html

- Click on the folder (circled above in green) to select where you want to save the files. Make sure you create a new folder, since we’ll be downloading hundreds of files!

- Your window should now look like the picture below, with the exception of the folder path (underlined below in pink), which depends on where you want to save the files. At the bottom of the window, as underlined below in red, it should say that you have 100 links selected.

- Click on the “Start!” button, which will bring up the download window, and start the download.

- Minimise the download window and go back to your Google search results in Firefox, then scroll to the bottom of the page and click on the number 2 to bring up results 101-200.

- Once this page has opened, do a right-click, but this time click on “dTa OneClick!” instead of “DownThemAll!”. This will start downloading results 101-200, but using the same settings as for the previous download, so this time you won’t see the settings window. After about five seconds you should see the 100/100 in the download window change to 100/200.

- You can go to the third page of Google results without waiting for the second page of results to stop downloading. Scroll down and click on the number 3, then once the page is opened, select the “dTa OneClick!” option again to download results 201-300.

- Again scroll down to the bottom, but this time we’re going to speed things up by opening the next results pages in new tabs. Click on the numbers 4 to 10 one by one with the middle button (scroll wheel) of your mouse, or if you don’t have this button, hold the Ctrl key on the keyboard while you click on them. Go to the first of the new tabs and select “dTa OneClick!”, then do the same for each of the remaining new tabs.
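For readers who want to see what the filter and the renaming mask from the steps above actually do, here is a minimal Python sketch. It only imitates the behaviour as I understand it, and the example.com addresses and the name `select_and_rename` are made up for the illustration.

```python
import re
from posixpath import basename, splitext
from urllib.parse import urlsplit

# Same expression as in the Fast filtering box. The unescaped dot matches
# any character, so this is slightly looser than a literal ".shtml".
FILTER = re.compile(r"(.shtml)$")


def select_and_rename(urls):
    """Keep URLs that pass the filter and map each one to a name.html filename."""
    chosen = {}
    for url in urls:
        if FILTER.search(url):
            # Imitates the *name*.html mask: keep the name, swap the extension.
            name, _ext = splitext(basename(urlsplit(url).path))
            chosen[url] = name + ".html"
    return chosen


# Made-up links standing in for a page of search results.
links = [
    "http://example.com/recipes/theclassicroastturke_65538.shtml",
    "http://example.com/images?q=the",
    "http://example.com/cached/page.html",
]

if __name__ == "__main__":
    for url, filename in select_and_rename(links).items():
        print(url, "->", filename)
```

Only the first link passes the filter, and it would be saved as theclassicroastturke_65538.html.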

Google will not let us access more than 1,000 results, but 1,000 texts will give us a pretty good-sized corpus. If you want more than 1,000 texts, then try searching for another term (such as “chicken”) and downloading again. To avoid duplicates, save to the same folder, and if the “Filename conflict” box comes up, click on Skip/Cancel and select “Just for this session”. Once you have done this, all subsequent duplicates will be ignored.

Converting to plain text

If you open one of the files you’ve downloaded in Notepad, you’ll see that the files are not very clean, and are full of html code. However, programs exist to clean this. If you use Windows, you can clean this with the appropriately named HTML2TXT (please add a comment if you know of a tool that does the same thing for another operating system):

- First, create a new folder somewhere to which we will export the cleaned files.

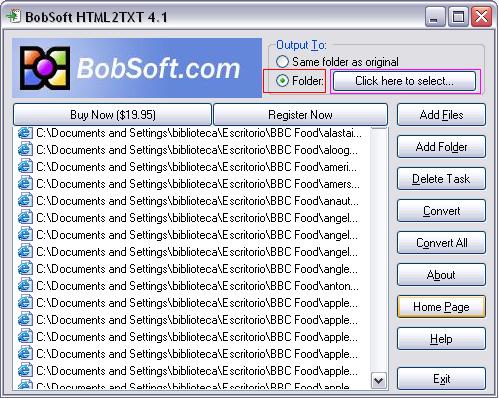

- Download and install Bobsoft’s HTML2TXT from here.

- When you launch the program, the “Unregistered Copy” window will appear. Click on “Try”.

- Click on “Add folder”, and then select the folder where you’ve saved the recipes. (Don’t try “Add files”, as there are too many files for this.)

- Click on the option to save the cleaned files to a new folder (highlighted in red below), then select “Click here to select” (highlighted in pink below) and choose the folder you created in the first step of this section.

- Click on “Convert All” to convert the files. Don’t panic if the window freezes and you get a “Not Responding” message. Just be patient.

- In the new folder you will find the cleaned txt files.

Because we’ve used the demo of HTML2TXT, you will find a short message at the top of each cleaned file. This shouldn’t be a problem for most uses of corpus analysis tools (unless you want, say, accurate word counts), but if you do want to completely clean the files, you can remove this message using cheap batch find/replace tools such as FileMonkey (cost $29).

If anybody knows of free tools that do the same as HTML2TXT or FileMonkey, please leave a comment.
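One possible free alternative, on any operating system, is a short Python script using only the standard library. This is a rough sketch and not a replacement for HTML2TXT: it simply drops tags, scripts and style sheets, and it assumes the downloaded pages can be read as UTF-8, which may not be true of every site. The names `TextExtractor`, `html_to_text` and `convert_folder` are my own.

```python
from html.parser import HTMLParser
from pathlib import Path


class TextExtractor(HTMLParser):
    """Collect visible text, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__(convert_charrefs=True)
        self.chunks = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip and data.strip():
            self.chunks.append(data.strip())


def html_to_text(html):
    """Return the text of an HTML page, one chunk of text per line."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.chunks)


def convert_folder(src, dst):
    """Write a .txt file to dst for every .html file found in src."""
    dst = Path(dst)
    dst.mkdir(parents=True, exist_ok=True)
    for page in Path(src).glob("*.html"):
        # Assumption: pages are UTF-8; undecodable bytes are replaced.
        html = page.read_text(encoding="utf-8", errors="replace")
        (dst / (page.stem + ".txt")).write_text(html_to_text(html), encoding="utf-8")
```

Calling `convert_folder` with the folder of downloaded recipes and a new output folder would produce one txt file per recipe. Navigation menus and other page furniture will still be in the text, so the result is no cleaner than the HTML2TXT output.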

You now have an almost-clean corpus of recipes that you can analyse using corpus-analysis tools such as AntConc.

These instructions can be adapted to create other corpora, but certain changes will be necessary. For example, we will not always have a single file extension (such as shtml) for all the files we want to download. I hope to add further tutorials explaining how to adapt this method for other corpora, at which point I will add a link to the bottom of this page.

Please use the comments section if you have any questions or comments to make about these instructions.

Norton for another year…

Last week I announced I wouldn’t be renewing my Norton subscription. Well, I definitely won’t be renewing for another year now, because after someone from Norton found my article, I received an apology and was given a free upgrade to Norton 10, with a one-year subscription. I no longer get the false hit. I am willing to give credit where credit is due, and I thank the member of staff for his very helpful support.